AI and Elections – Observations, Analyses and Prospects

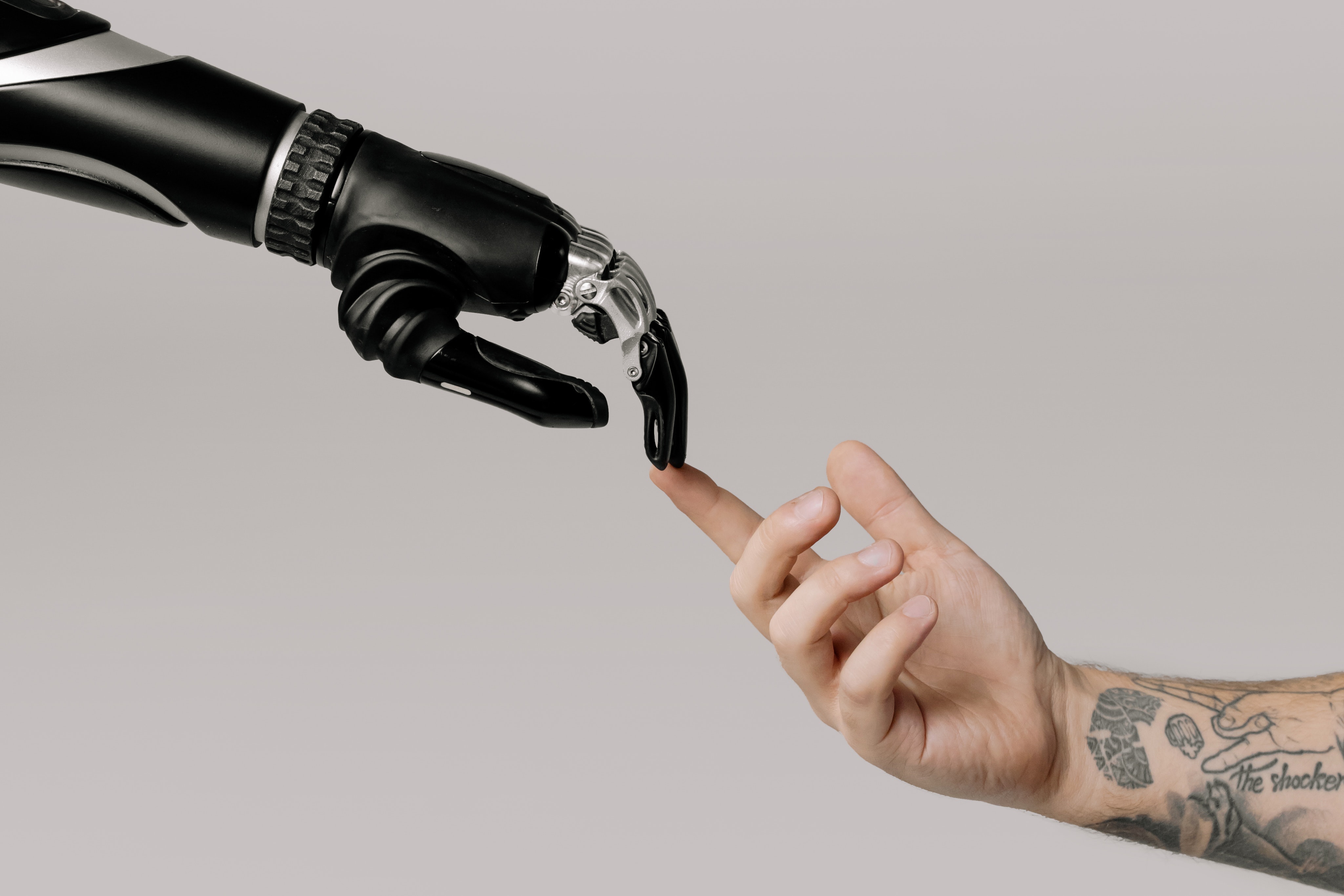

Artificial intelligence (AI) is one of the key technologies of the 21st century and a backbone of the worldwide digitalization of society, including essential areas such as business, education and health. But the very heart of democratic society – the political and public realm – is also affected by AI systems.

The public debate on artificial intelligence fluctuates between two positions: far-reaching hopes for the optimization of socially relevant processes on the one hand, and manifold fears such as loss of control, surveillance, dependency and discrimination on the other. This polarized form of debate can also be seen in the relationship between democracy and AI. In many cases, AI systems are seen primarily as a threat to democracy. At the center of this is the feared manipulation of the will of voters, for example by adaptive social bots, by individual voter influence and misuse of data, or by external intelligence services influencing elections. On the other hand, hopes are regularly expressed that AI can foster social welfare, even to the point of speculative considerations that it might simply replace democracy at some future point because of its ability to gauge the public will in a manner superior to politics through optimizing calculations and management.

Background

AI is a driver or so-called enabler of important fields of technological progress, for instance in the fields of medicine or mobility. At the same time AI has a strong impact on individual communication and also on the shape of the public sphere. It helps filter and sort content, prioritize information (by specific algorithmic rules), and personalize applications to achieve a finely tailored level of user experience. The purpose of information transmission in digital communication networks is increasingly driven by individual needs, not by the active creation of a political public sphere. One reason for this is that platform operators, who pursue commercial interests, dominate information transmission. We thus see a conflict of interests in the DNA of digital public spheres: While private platform operators use the Internet to provide public communication, they do not aim at democratic opinion forming, but rather at advancing their commercial interests through data collection and personalized advertising.

Technologically, the personalization of information technology is reflected in the deployment of learning AI systems. They are primarily used by search engines and social media. They process data about the respective interests of individuals, but they do not strive for social understanding and political dialogue. That is a comprehensive challenge for democratic societies in digital transformation. But what about the particular impact of AI on elections as the heart of democratic participation?

A group of independent experts from Germany’s Platform for AI of the Federal Ministry of Education and Research (BMBF) and the National Academy of Science and Engineering (acatech) thoroughly examined the potential and risks that AI posed to the elections in Germany in September 2021. Drawing from their work, this Spotlight introduces the most important and currently significant AI-driven opportunities to influence elections and individual opinion forming.

The impact of AI systems on elections is especially significant in the field of information gathering and opinion formation. AI helps to evaluate and organize information so that a meaningful selection can be made for each individual out of the flood of information on the Internet. AI can be used to disseminate information more effectively, considering both the intended audience and the appropriate timing and channel. These new possibilities are often discussed in the context of abuse scenarios, which are explained below. After this description of threats from AI in the context of disinformation, microtargeting and fake content, I will discuss the preconditions for its democratic use and the potential of AI to support the building of a critical public sphere.

Use of AI for Disinformation

A possible risk of AI is the manipulation of individual voting decisions prior to an election through targeted disinformation campaigns. Search engines and social networks use different criteria than the press media to disseminate information. Search engines and social networks mainly present third-party content and offer little content of their own. This means that even non-professionally produced, diverse information can quickly achieve a wide reach. In addition, the information is screened and weighted by algorithms to meet the interests of the users, but also the expectations of the advertising customers. Users contribute to the evaluation and dissemination of content through likes, retweets, sharing, and similar functions. It is especially these functionalities of the platform economies that can easily be manipulated in an automated way. Particularly in times of crisis and in the run-up to elections, the danger of interested parties disseminating misleading information via the internet increases. Social bots based on AI processes are often used to amplify disinformation campaigns that are also carried out simultaneously in the press and on television. For example, automated social bots distribute their posts across different accounts simultaneously or disguise themselves by communicating in a human-like manner.

AI to Create Deepfakes

AI systems can also be used to create deepfakes (images, videos, or audio files manipulated by AI) that blur the lines between reality and fiction. While deepfakes are used predominantly in the pornographic field, where they almost exclusively involve women, they can also show well-known politically active individuals performing actions they never performed and making statements they never made. These public figures can then be defamed or even blackmailed with the allegedly compromising image material. The aim can be to influence political life or to cause the people concerned to withdraw from politics. At the same time, deepfakes also offer a “liar’s dividend”, a path of evasion for public figures criticized for actions or statements they have actually made: They increasingly claim that the image, video or audio files incriminating them are not real. For example, former U.S. President Donald Trump made such a claim about the “Access Hollywood” video, which incriminates him by depicting him boasting about harassing women.

AI for Personalized Advertising and Microtargeting

Personalized advertising is a legitimate avenue for conveying voter information but also a tool for manipulation through microtargeting. The creation of user profiles is a prerequisite for individually and personally addressing voters. This creation of personality profiles is used primarily for personalized advertising and is thus part of the central business model of the dominant digital platforms. Some of the potential danger of manipulation in the area of politics and elections derives from these advertising capabilities (see the discussion of Cambridge Analytica). For advertising in general, a relatively small rate of efficacy is sufficient to make a difference: If only a few out of thousands of people react to the advertising, it is still worthwhile. In this respect, personalized advertising could be similarly effective in electoral systems in which very small voting advantages are often decisive. The question arises, however, as to how far these mechanisms can be transferred from advertising to the dynamics of pre-election publics, since many other factors also come into play here, such as the emotionalization of debates and the position of the peer group.

Assessments and Perspectives for a Desirable Application of AI in the Run-up to Elections

As we have seen, there are many possible undesirable effects of AI-driven applications on voter information and public opinion formation. Some of them are very likely already shaping public opinion. For example, the Brexit campaign was supported by personalized false or pro-Brexit advertising on Facebook.

The impact of AI systems and electoral content moderation can often only be assessed in retrospect. Our initial observations of the German federal election in 2021 suggest that there was a significant amount of simple misinformation and fake news in social media as well as in the press and on television, as shown by an investigation by the social campaigning network Avaaz. Fake information was mainly spread by the right wing, and it can be assumed that this was in cooperation with campaigns. It can thus be concluded that even though AI is receiving increased attention as a conveyor of targeting and profiling, conventional media and established news sites also have a significant influence on the distribution of misinformation. AI-generated deepfakes did not play a role in the run-up to the 2021 federal election.

A second observation in the German context is that the positive assets of AI in the area of voter information and organization have not yet been fully exploited. In the following, I will discuss helpful applications of AI systems for democratic controversies and trustworthy communication. This includes a discussion of how AI systems – in addition to their better-known functions in the form of search engines and social media – could be used in the future to support information, decision-making processes and election-campaign organizing by the various parties.

AI to Counter Biased Content

Despite the aforementioned negative implications of AI for public discourse, AI can also contribute to better balancing media content. AI can help to identify biased information and provide alternative coverage. In general, but especially in the context of elections, there are tendencies toward one-sided reporting. This so-called media bias arises from a certain choice of words and topics (“framing”), which makes the information appear in a certain light. Given the immense amounts of news in digital media, monitoring online content through human observation and assessment alone is no longer possible. For this reason, platform operators are relying on automated analyses that enable rapid detection and assessment of media bias and, if necessary, can serve as a basis for counter-offers. Tools such as browser plug-ins can offer users supplementary links to balanced information, improving their ability to evaluate biased information when forming political opinions. Specific AI-based online services can provide counterarguments in the case of biased representations resulting from algorithmic filter bubbles. Social bots can also help in countering false and tendentious reporting. They can automatically disseminate verified information and, if necessary, interactively respond to questions in the context of elections and overall political opinion-forming.

Recommender Systems to Support Opinion Formation

One applied example in which AI could be increasingly used in the context of elections is election recommendation apps. “Recommender systems” in general are a typical application area of machine learning methods. Political recommendation engines are mostly developed and provided by neutral third parties. They can be a valuable aid for evaluating and comparing different election programs. The current voting advice apps barely contain any machine-based learning processes. They currently learn neither from the aggregated data nor from information collected from individual users. Each individual usage of the app thus starts again from scratch. Not only here, but also during a recommendation process, the use of additional automated elements is conceivable: Users could be “walked” through the program by a chatbot that explains the individual statements with examples or responds to questions from the users.

The embedding of such mechanisms in political information processes represents a possible field of AI system applications in political education, whereby the development of transparent, privacy-compliant, trustworthy and fair assistance systems must play a very significant role.

AI Tools for Electoral Content Moderation

In the context of elections, AI systems are also increasingly being used as part of risk-management strategies, such as “electoral content moderation”. Content moderation is a strategy for curating content in social networks. The aim is to remove misinformation, hate speech, and deepfakes. Social media platforms are also increasingly using AI systems to detect suspicious patterns in content before elections, or to detect content as election advertising. Special self-regulatory platform rules now apply to such content on all known platforms.[1] In this way, AI systems can make a valuable first contribution to the detection of fake news and support citizens in reaching informed opinions.

It is to be expected that the systems will improve and that progress will be made, especially in the area of detecting false or even tendentious reporting. It can therefore be assumed that more and more tools for detecting fake news or deepfakes will be offered in the future.[2] Until now, though, most browser plug-ins and similar applications need to be actively installed by the users and therefore already presuppose a critical awareness of disinformation, which limits their efficacy.

The algorithmic content moderation systems already in existence have been criticized for often being opaque, unaccountable, and poorly understood. For example, the decision as to why some content is removed and other content is not is neither transparent nor comprehensible. One of the key tools of electoral content moderation is AI-based upload filters. Upload filters, which have served, for example, to detect pornography by default, have been repeatedly criticized since their introduction. Reasons for this include the risk of collateral damage from false filtering or false incentives that can lead to censorship.

If the platform operators do not (yet) sufficiently fulfil their transparency obligations, academia or civil society organizations in particular can act themselves and conduct their own experiments on how the algorithms operate in order to reconstruct the criteria to which content management is subject (so-called reverse engineering). However, this method, which is reserved for experts, is usually too complex to provide transparency during a current election campaign, offering only an a posteriori explanation of the automated content selection. In this regard, it is important to state that science and research-based non-governmental organizations must be given access to social media platforms (as the current draft of the European Commission’s Digital Services Act also requests) in order to conduct research on the effects and functionalities of AI in digital platforms.

The Way Forward

For the potential of AI to be fully realized, a secure regulatory framework is needed. Germany, like the other states of the European Union, does not yet have a sufficient regulatory framework to counter the future risks of AI. The European Commission’s regulatory proposal on AI, presented in April 2021, requires that a system be classified as high-risk if it could lead to systemic adverse effects for society as a whole, including jeopardizing the functioning of democratic processes and institutions, and civil society discourse.

Possible risks in the use of AI systems in connection with elections can be countered with various legal and technical measures as well as ethical agreements. Specifically, this involves preventing misinformation or manipulated information and the curation or automated selection of media content that is important for shaping political opinion. Key regulations include the transparency of selection criteria and the right to explanation in the context of electoral content moderation, labeling requirements for content generated by AI as well as for misinformation in general, and the enforcement of effective platform policies.

The principle of transparency in AI and algorithmic systems is of great importance. The planned Digital Services Act (DSA) requires service providers to explain in a transparent and comprehensible way which measures their in-house regulations comprise and how they work. In this context, attention is usually drawn not only to transparency, but also to comprehensibility, explainability, and the presentation of information in a way that is appropriate for the addressees. These basic principles of transparency and comprehensibility apply just as much to the use of AI and algorithmic decision systems to select information that relates to democratic elections.

A further contribution to transparency and the creation of trustworthy public communication is the obligation to label content generated by AI (synthetic media). Individuals must be made aware that they are interacting with an AI system. The European Commission’s regulatory proposal on AI already envisages a corresponding labeling obligation. Another instrument (that doesn’t rely on AI) against false and manipulated information is the labeling of content with warnings that indicate to users that fact checkers doubt the claims of the article and provide references to other verified sources. In this way, users are prevented from assuming that false and manipulated information is true and from spreading it.

The list ends with one of the most important and comprehensive tools for realizing AI for the public good: platform regulation. The blocking of then-U.S. President Donald Trump’s Twitter account in January 2021 and his exclusion from various platforms (de-platforming) made the general public aware of the deficits of platform regulation. One starting point here would be binding standards based on the model of regulated self-regulation, as is common in Germany and many other EU countries for the media sector. Platform operators could foster the establishment of independent boards consisting of experts and representatives of civil society. Together with the operators, these boards would discuss common standards and codes of conduct on questions of algorithm-based electoral content management, as well as on all other topics relating to a pluralistic and non-discriminatory democratic public sphere. For effective implementation of these and other forms of regulation, a central European institution is needed.

Conclusion

Technology design is always also the design of society. AI applications help us to evaluate and sort content. We depend on this support for orientation and to be able to make meaningful use of the diverse information and communication opportunities. However, a democratic society is not obliged to leave this task to private commercial platform operators acting solely according to their own purposes. On the contrary, democracy is called to define the framework conditions for transparent, truthful and fair public communication itself. Technologies are an integral element of the common effort to create a “good” society. For this reason, a sound design and a well-founded assessment of possible negative side effects of technological innovations are a must. Each of us is individually required to use technologies responsibly, but without question, powerful actors and politicians have a special responsibility to create safe and ethical frameworks for a welfare-oriented development of the digital society.

[1] See, for example: Facebook; Google/YouTube; Twitter.

[2] E.g., Duck Duck Goose or 3D Universum

This Spotlight is published as part of the German-Israeli Tech Policy Dialog Platform, a collaboration between the Israel Public Policy Institute (IPPI) and the Heinrich Böll Foundation.

The opinions expressed in this text are solely those of the author/s and do not necessarily reflect the views of the Israel Public Policy Institute (IPPI) and/or the Heinrich Böll Foundation.
